Uncertainty estimates?

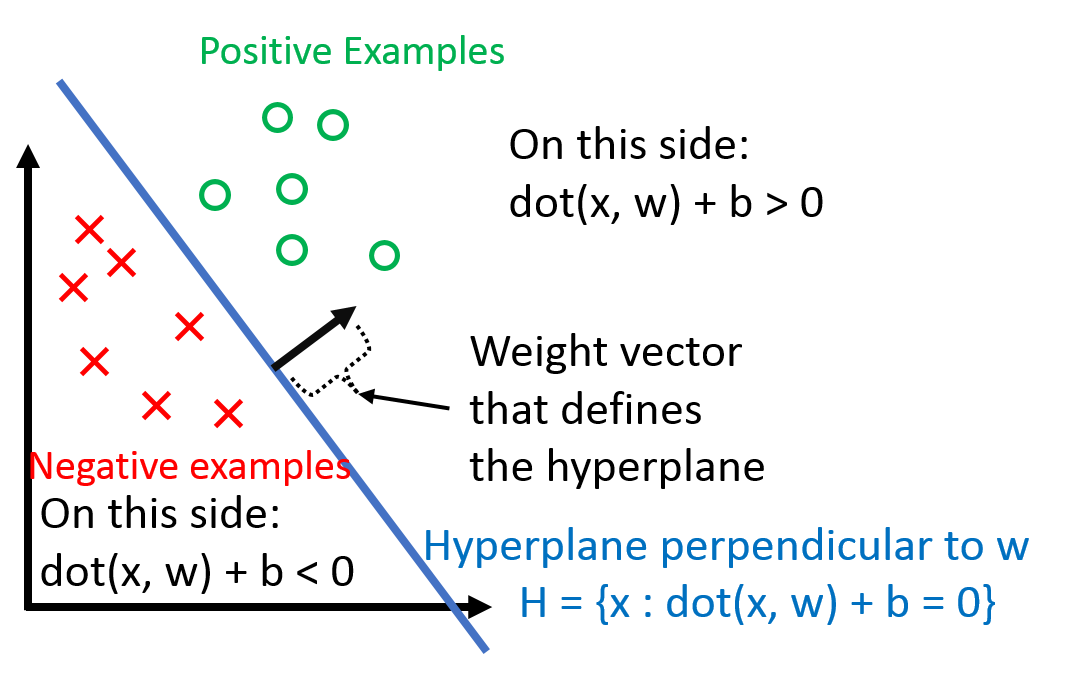

The perceptron's score $\mathbf{w} \cdot \mathbf{x}$ only tells us that one instance is more negative or positive than another; it carries no notion of certainty or probability.

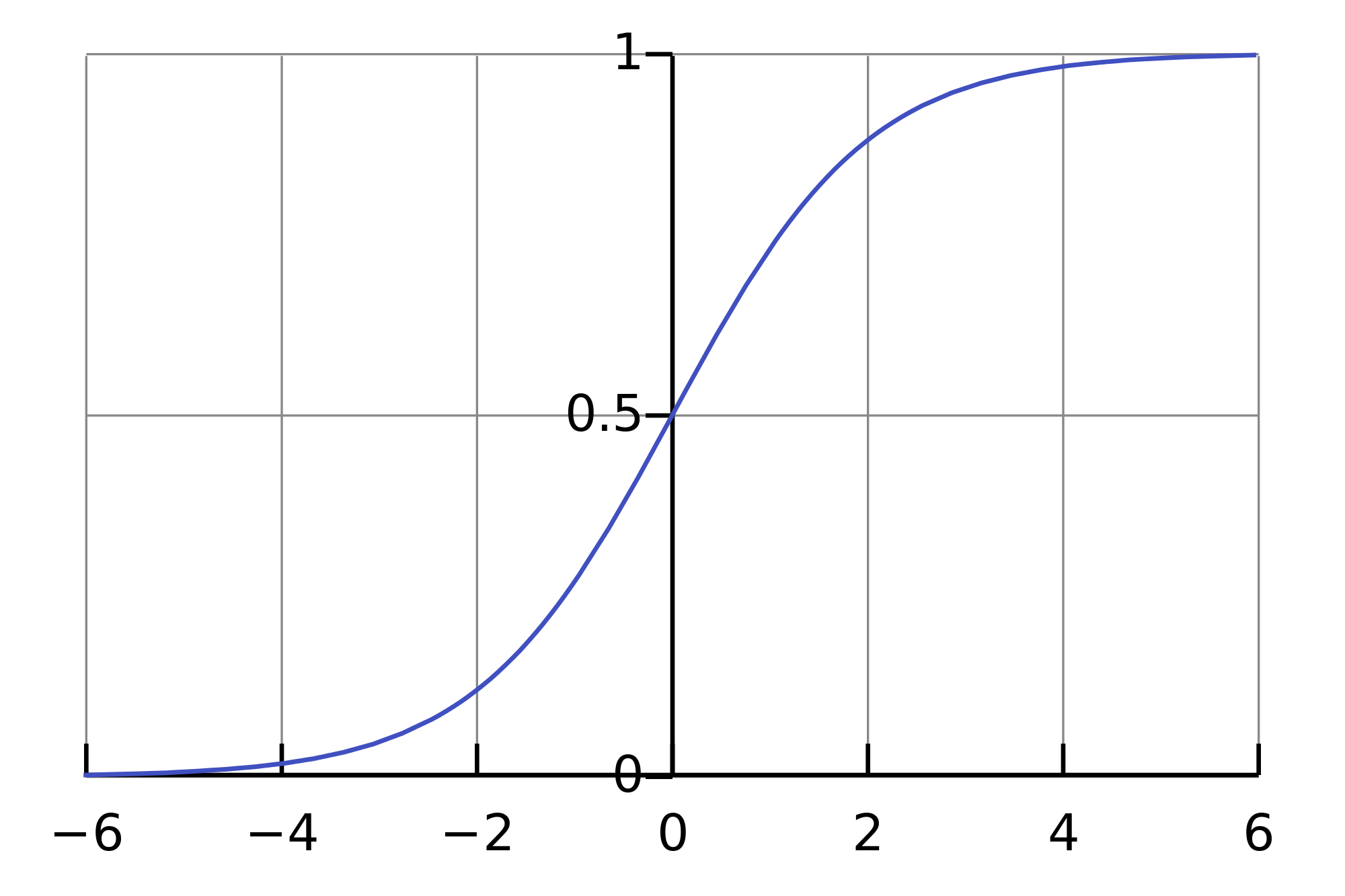

The sigmoid function $\sigma(z) = \frac{1}{1 + e^{-z}}$ "squishes" any real number into the interval between 0 and 1:

Logistic regression: $P(y = 1 \mid \mathbf{x}) = \sigma(\mathbf{w} \cdot \mathbf{x})$
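A minimal sketch of how the sigmoid turns a perceptron-style score into a probability. The weight vector and feature vectors below are made up for illustration, and, like the formula above, the sketch has no separate bias term.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the interval (0, 1)."""
    # Numerically stable form: avoids overflow in exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dot(w, x):
    """The perceptron score w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))

def predict_proba(w, x):
    """Logistic regression: P(y = 1 | x) = sigmoid(w . x)."""
    return sigmoid(dot(w, x))

# Hypothetical weights and feature vectors, for illustration only.
w = [2.0, -1.0, 0.5]
x_pos = [1.0, 0.0, 2.0]   # score  3.0
x_neg = [0.0, 3.0, 1.0]   # score -2.5

for x in (x_pos, x_neg):
    score = dot(w, x)
    print(f"score={score:+.1f}  P(y=1|x)={predict_proba(w, x):.3f}")
```

The raw scores (3.0 and -2.5) are unbounded, while the sigmoid maps them to roughly 0.95 and 0.08, which can be read as the model's confidence that each instance is positive. A score of 0 maps to exactly 0.5.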

Multiclass classification?

Not all classifications we need are binary:

- topic/genre classification

- fine-grained sentiment classification

- essay scoring according to different aspects

A single binary perceptron cannot do this...

Several of them combined, though, can!

One against all

- Training: one binary classifier for each class against the rest

- Testing: apply all classifiers; the class whose classifier gives the highest score wins

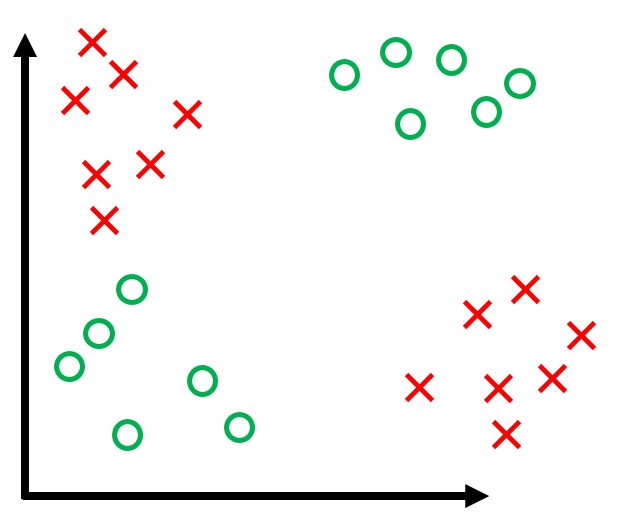
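A minimal sketch of one-against-all built from plain binary perceptrons. The helper names (`train_perceptron`, `train_one_vs_all`, `predict_one_vs_all`) and the toy data are hypothetical; each feature vector carries a constant 1 as a bias feature, and the perceptron variant shown updates whenever $y \cdot (\mathbf{w} \cdot \mathbf{x}) \le 0$.

```python
def dot(w, x):
    """Dot product of two equal-length vectors."""
    return sum(wi * xi for wi, xi in zip(w, x))

def train_perceptron(X, y, epochs=10):
    """Binary perceptron; each y[i] is +1 or -1. Returns the weight vector."""
    w = [0.0] * len(X[0])
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            if yi * dot(w, xi) <= 0:  # wrong side of (or on) the boundary
                w = [wj + yi * xj for wj, xj in zip(w, xi)]
    return w

def train_one_vs_all(X, labels, epochs=10):
    """Train one binary perceptron per class: that class (+1) vs. the rest (-1)."""
    return {
        c: train_perceptron(X, [1 if lab == c else -1 for lab in labels], epochs)
        for c in sorted(set(labels))
    }

def predict_one_vs_all(models, x):
    """Apply every classifier; the class whose classifier scores highest wins."""
    return max(models, key=lambda c: dot(models[c], x))

# Hypothetical toy data: features [x1, x2, 1], the constant 1 acting as a bias.
X = [[2, 0, 1], [3, 1, 1], [0, 2, 1], [1, 3, 1], [-2, -2, 1], [-3, -1, 1]]
labels = ["a", "a", "b", "b", "c", "c"]

models = train_one_vs_all(X, labels)
print(predict_one_vs_all(models, [4, 0, 1]))  # a
```

Since each binary classifier is trained independently, their scores are not calibrated against one another; taking the maximum is the simple heuristic described above.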

Non-linear classification

- Linear classifiers (perceptron, logistic regression, etc.) can't solve problems whose classes no single line (hyperplane) can separate, such as XOR

- Partly responsible for one AI winter

- Neural networks with an appropriate architecture (e.g. a hidden layer) can!
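The classic non-linearly-separable example is XOR: the output is 1 exactly when one of the two inputs is 1. The sketch below uses hand-chosen weights (an assumption for illustration; in practice a network learns its weights) to show that one hidden layer of two threshold units is enough, while a brute-force search over a coarse weight grid finds no single unit that computes XOR.

```python
import itertools

def step(z):
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0

def unit(w, b, x):
    """A single linear threshold unit (perceptron-style neuron)."""
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)

XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

def xor_network(x):
    """Two hidden units plus one output unit; weights are set by hand."""
    h_or = unit([1, 1], -0.5, x)    # fires if x1 OR x2
    h_and = unit([1, 1], -1.5, x)   # fires if x1 AND x2
    return unit([1, -1], -0.5, [h_or, h_and])  # OR and not AND = XOR

# The hidden layer makes XOR solvable ...
print(all(xor_network(x) == y for x, y in XOR.items()))  # True

# ... while no single unit on a coarse weight grid gets all four points right
# (no real-valued weights would, since XOR is not linearly separable).
grid = [i / 2 for i in range(-4, 5)]   # -2.0, -1.5, ..., 2.0
single_unit_solves_xor = any(
    all(unit([w1, w2], b, x) == y for x, y in XOR.items())
    for w1, w2, b in itertools.product(grid, repeat=3)
)
print(single_unit_solves_xor)  # False
```

The hidden units re-represent the input so that the output unit faces a linearly separable problem; that change of representation is what the single linear classifier lacks.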

Recap

- Learned how to represent text with numbers

- How to learn binary linear classifiers with the perceptron

- Got a taste of feature engineering

- Saw some extensions for more advanced classification tasks